As augmented reality and virtual reality gain more respect in the sports realm, there are some issues to iron out, says Thierry Fautier.

Over the last few years, broadcasters have been searching for ways to deliver more immersive video experiences. Virtual reality (VR) and augmented reality (AR) have emerged as effective ways to meet that need, especially for premium sports events. However, a few challenges must be overcome before widespread deployment can occur.

The first issue with using VR/AR for live sports is the complexity of the workflow. VR production is migrating from 4K to 8K, which means 8Kp60 content must be delivered with pristine live stitching quality. The industry is nearly there, but delivering that quality carries a high price tag. In addition, equipment size may be a concern for in-stadium and mobile use cases. The alternative is to rely on an all-in-one solution where capture and stitching are done in a single device, which can reduce quality.

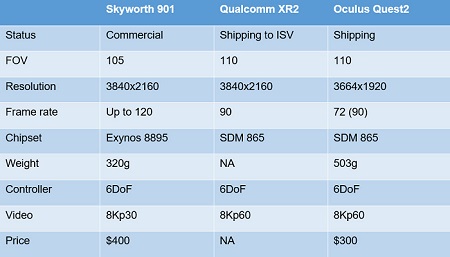

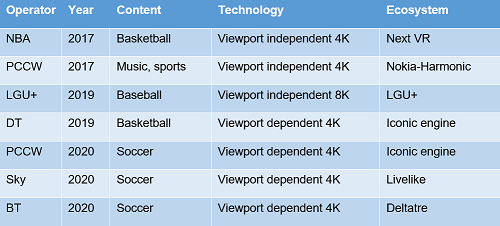

Another challenge for VR is consumer adoption. The first wave of devices, such as Gear VR and Oculus Quest, offered limited resolution. Newer head-mounted displays (HMDs), such as the Qualcomm XR2, Oculus Quest 2 and Skyworth 901, offer a higher display resolution. As depicted in Table 1, there’s been a drastic improvement in quality of experience (QoE), especially for 8K content that can be natively decoded on those devices.

For in-stadium applications, there’s an expectation of low latency between the camera capture and the rendered VR content on the mobile device. Latency can be reduced if video processing is done locally in the stadium with a multi-access edge computing (MEC) architecture rather than in the public cloud. However, the biggest contributor to latency is the delivery protocol.

It’s important to use low-latency protocols to deliver content. There are two options. One is to use WebRTC as the distribution scheme, which results in sub-second latency. The second is to use CMAF low latency with either DASH or HLS manifest formats, which provides latency in the same range as broadcast (around five seconds). The problem with deployed systems is the increased processing complexity. Viewport-dependent solutions create long delays that prevent an in-stadium experience, whereas viewport-independent solutions can reuse existing OTT low-latency solutions.

On the bright side, we’re starting to see HMDs with 8K decoding capability and good display performance at a reasonable price, as well as new 5G phones that support 8K decoding.

Evolution of VR

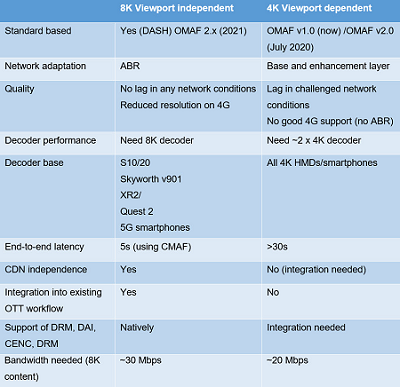

VR processing can be split into two approaches. The first is viewport-dependent, where only the area corresponding to the device’s field of view is sent over the network. The main benefit of this approach is reduced bandwidth; the downside is latency. Each time the user moves their head, part of the newly visible scene has not yet been transmitted and must be fetched. This approach is standardised by MPEG in OMAF v2.0.

The second is viewport-independent, where the full sphere is sent to the device. This approach increases the bitrate but has the advantage of supporting all devices without any adaptation, because it relies on standards-based OTT protocols. This approach was standardised for 4K in OMAF v1 and has been recommended by the VR Industry Forum in its Guidelines for 8K. There are plans to standardise it for 8K in a future OMAF version. Table 2 summarises the differences between the two approaches.
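To give a rough sense of the bandwidth trade-off between the two approaches, the sketch below estimates what fraction of the full sphere a rectangular field of view covers. The 80Mbps full-sphere bitrate and the 90° field of view are hypothetical values chosen for illustration, not figures from this article. The result is an upper bound on the saving: a real viewport-dependent system also sends a guard margin and a low-resolution background, and bitrate is not strictly proportional to area.

```python
import math


def viewport_sphere_fraction(h_fov_deg: float, v_fov_deg: float) -> float:
    """Return the fraction of the full sphere covered by a rectangular field of view."""
    half_h = math.radians(h_fov_deg) / 2
    half_v = math.radians(v_fov_deg) / 2
    # Solid angle of a rectangular pyramid with apex angles h and v.
    solid_angle = 4 * math.asin(math.sin(half_h) * math.sin(half_v))
    # The full sphere subtends 4*pi steradians.
    return solid_angle / (4 * math.pi)


# Hypothetical full-sphere (viewport-independent) bitrate, for illustration only.
FULL_SPHERE_MBPS = 80.0

fraction = viewport_sphere_fraction(90, 90)
viewport_only_mbps = FULL_SPHERE_MBPS * fraction

print(f"Viewport covers {fraction:.1%} of the sphere")
print(f"Idealised viewport-only bitrate: {viewport_only_mbps:.1f} Mbps")
```

A 90° by 90° viewport covers one sixth of the sphere, the same as one face of a cube map, which is why sending only the visible area is attractive when bandwidth is scarce.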

One of the key attributes of a viewport-independent solution is seamless integration in an existing OTT workflow. Operators that want to use low latency, DRM or digital ad insertion systems can support them right out of the gate, without lengthy development or integration. Another benefit is that any native 8K player can decode the stream, including all new HMDs and 5G smartphones released since 2020. In addition, thanks to adaptive bitrate (ABR) streaming, backward compatibility is guaranteed with legacy 4K devices.
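The backward compatibility described above can be pictured as an ordinary ABR decision: the player picks the best rendition that the device can decode and the network can sustain. The sketch below is a minimal illustration, not any particular player's algorithm. The ladder, its bitrates, the `Rendition` type, the `pick_rendition` function and the 0.8 safety factor are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rendition:
    name: str
    width: int            # horizontal resolution in pixels
    bitrate_mbps: float   # hypothetical encoding bitrate


# Hypothetical ladder for a full-sphere stream; bitrates are illustrative only.
LADDER = [
    Rendition("8K", 7680, 80.0),
    Rendition("4K", 3840, 25.0),
    Rendition("HD", 1920, 8.0),
]


def pick_rendition(ladder: List[Rendition], max_decode_width: int,
                   throughput_mbps: float, safety: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition the device can decode and the network can carry."""
    decodable = [r for r in ladder if r.width <= max_decode_width]
    if not decodable:
        raise ValueError("device cannot decode any rendition in the ladder")
    # Keep some headroom so throughput dips do not stall playback.
    budget = throughput_mbps * safety
    affordable = [r for r in decodable if r.bitrate_mbps <= budget]
    if affordable:
        return max(affordable, key=lambda r: r.bitrate_mbps)
    # Nothing fits the budget: fall back to the lightest decodable rendition.
    return min(decodable, key=lambda r: r.bitrate_mbps)


print(pick_rendition(LADDER, max_decode_width=7680, throughput_mbps=200).name)
print(pick_rendition(LADDER, max_decode_width=3840, throughput_mbps=200).name)
```

In this sketch a legacy device that decodes at most 4K simply never selects the 8K rendition, so the same stream serves both device generations.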

To support high-bandwidth applications, adequate network infrastructure is needed. At home, a fibre or DOCSIS 3.1 network that offers up to 1Gbps connectivity is ideal. For mobile, a high-capacity network is required – 5G is the perfect fit, with bandwidth ranging from 100Mbps to the Gbps range, depending on the spectrum used.

One of the myths of VR is that a 5G low-latency network is needed to avoid motion sickness. This is inaccurate, as the motion-to-photon delay comes from the application. In the viewport-dependent architecture, if the tiles do not arrive on time, a lower-resolution backup background is shown to users for a few frames. In a viewport-independent approach, the content is entirely available to users. Therefore, there is no perceived delay when users move their head.
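The tile fallback mentioned above can be sketched as a per-frame composition step in a tile-based player: for each tile in view, use the high-resolution version if it has arrived, otherwise show the matching region of the low-resolution background. This is a simplified illustration only. The function name `compose_viewport`, the tile identifiers and the string placeholders standing in for decoded pictures are all hypothetical.

```python
from typing import Dict, Iterable, List


def compose_viewport(visible_tiles: Iterable[int],
                     high_res_tiles: Dict[int, str],
                     background: str) -> List[str]:
    """Return what is displayed for each visible tile in a single frame."""
    layers = []
    for tile_id in visible_tiles:
        tile = high_res_tiles.get(tile_id)
        if tile is not None:
            # The high-resolution tile arrived in time: display it.
            layers.append(tile)
        else:
            # Tile is late: fall back to the low-resolution background region.
            layers.append(f"{background}[{tile_id}]")
    return layers


# Tile 2 has not arrived yet, so its region comes from the background.
frame = compose_viewport([1, 2, 3], {1: "hi-1", 3: "hi-3"}, "lo")
print(frame)
```

The fallback is decided inside the player, frame by frame, which is consistent with the point that motion-to-photon delay is an application matter rather than a network one.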

Current state of AR

Today, AR is mostly used to overlay graphical information in real time on top of the video watched by users. Soon, volumetric captures of moving objects within the video will be displayed as an overlay on smartphones or HMDs. MPEG is very active in the volumetric capture of video and its rendering on consumer devices, with two standards: V-PCC (Video-based Point Cloud Compression) and MIV (MPEG Immersive Video).

A key element of a consumer AR experience is the use of a smartphone to create an overlay, or an HMD that connects to the end user’s mobile device to create an overlay layer on top of the video played by the mobile device. Expect to see volumetric video applications in the future. The industry is waiting for Apple to work on an XR HMD that will support both AR and VR.

What’s next?

On the VR front, the aggregation of different features – 8K video, multiview, Watch Together, social media – will become the norm. Those technologies are maturing and there will be more commercial deployments on HMDs and 5G smartphones in the coming years. Viewport-independent technology will be completely integrated into the OTT workflow.

AR is evolving to include more video: V-PCC and MIV will not only be file-based for VOD consumption but will also support live events, where a well-known athlete or musical artist appears as a hologram in front of millions of people. Intel and Canon are pioneering free-viewpoint technology, with interesting preliminary results.

We’re only at the tip of the immersive experience iceberg. In a few years, we might be able to watch a live sports event from any angle we choose. Organisations such as MPEG and the VR Industry Forum will play an important role in moving the industry forward.

Beyond VR and AR, the next generation of immersive experiences will involve integrating capabilities such as Watch Together, allowing viewers to watch live sports with friends and chat in a split-screen view during the event.