Transitioning multi-channel QC and monitoring tasks to the cloud generates enormous efficiency gains for broadcasters and MVPDs alike, says Ted Korte

General conversation around the digital TV transition often gives off the aura that it was all in a day's work. While every TV delivery platform had its unique challenges and timelines, the transition was a multi-stage process with many moving parts. The effects of this challenging transition are still felt around the industry to this day and will be for some time.

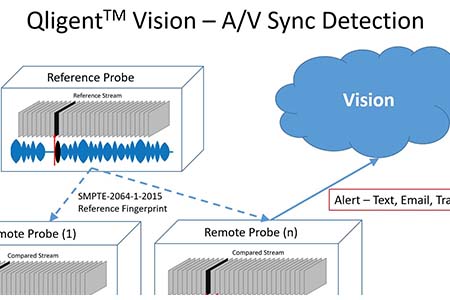

The over-the-air TV broadcaster and the MVPD (multichannel video programming distributor) have both been challenged with high-density monitoring environments. Tasked with an ever-expanding universe of signals and data points to monitor, the MVPD landscape now commonly spans satellite, cable, IPTV and OTT platforms. Signals have many more degrees of freedom, as they are created, combined, modified and distributed over many paths and over a wide variety of distances. From monitoring on-demand subscriber connections to live, linear local broadcast feeds, to satellite connections and contributed IP streams, there is content coming and going in all directions.

Over-the-air TV delivery has similarly evolved. It is more capable and thus more complex than ever, with more programme streams and channels occupying the same UHF and VHF spectrum. Alongside higher-density multi-channel programme delivery, broadcasters have a highly intricate air chain to monitor through a complex architecture including encoders, multiplexers, gateways and studio-to-transmitter links. Together with the transmitter itself, these all play critical roles in the overall signal quality that reaches the viewer.

In addition to understanding the health and status of each signal, stream and data point (not to mention troubleshooting problem areas that affect signal quality and delivery), there is a need to analyse and understand how all of this activity correlates to the viewing experience.

Simply put, there is no easier and more cost-efficient means of achieving this goal than the cloud, whether working in terrestrial or multiplatform systems.

Change in motion

Signal monitoring has never been easy, but the complexity has grown tenfold since the digital transition. In the MVPD world, linear analogue streams entered and exited the cable plant over legacy RF and satellite connections, with little to no variations in bandwidth. Analogue quality of service monitoring leveraged fixed components to manage ghosting and other signal degradations rife with artifacts. Over-the-air broadcasters, meanwhile, dealt mainly with singular programme streams of fixed bandwidth coming in and out of the RF plant.

The introduction of digital signals gave birth to a highly dynamic contribution and distribution environment. Terms like jitter, latency and macroblocking quickly became part of the TV lexicon. In the case of macroblocking, MVPDs and broadcasters quickly had to adjust to monitoring for both compression-related and delivery-related root causes. This became a common problem of the digital age: how many bits can you fit into a pipe and ensure they come out correctly at the other end(s)?

Technicians are no longer monitoring deterministic TV signals; they are dealing with highly dynamic data streams that are ever-fluctuating. It is nearly impossible to simply lock down a static monitoring system when dealing with network congestion, buffer overloads or multiplexed architectures moving bits between many encoded streams, not to mention understanding how these issues affect each other and ultimately affect the viewing audience. There is no longer a simple one-to-one correlation.

Shifting universe

The shift away from fixed monitoring (a chain of standalone hardware systems dedicated to specific applications and departments) and toward software-defined, platform-based monitoring is synonymous with the digital transition. There is no way to justify the expense of separate, dedicated legacy systems for the ever-expanding universe of digital signal monitoring responsibilities, which today crosses into areas like social media activity that simply did not exist just a few years ago.

Software-defined monitoring systems centralise these responsibilities and can be deployed in the cloud, which enables the offload of monitoring responsibilities to an outside managed services layer. This follows the trends and strategies that many IT departments are moving toward today.

Both options bring exceptional value to users in terms of better understanding the health and status of their ecosystem and troubleshooting problem areas that affect signal quality. The richer systems on the market go far deeper, helping operators better analyse and understand how all of this activity correlates to the viewing experience and, ultimately, viewer satisfaction.

However, while on-premises systems bring exceptional value, monitoring in the cloud amplifies the benefits tenfold through improved collaboration, reduced expenses, better scalability and a quicker return on investment.

Sun in the clouds

In addition to offloading the complexity of load balancing, redundancy, reliability and maintenance, one of the biggest benefits of monitoring in the cloud is its economy of scale across a large network, eliminating the silos of responsibility often found with on-premises strategies.

The ROI of working in the cloud for the MVPD or the over-the-air network operator quickly accelerates upon surveying the overall contribution and distribution landscape. In today's multi-distribution model environment, the typical structure spreads monitoring across a series of concentric rings, from the central studio onto regional headends and local sub-headends. The deeper we get into the distribution chain and through the last mile, the more quickly the data points to monitor scale, from the tens of thousands to potentially well into the millions.

Too often, the segmentation of monitoring responsibilities by department or facility prevents useful information from easily carrying over to the next step in the chain. One example is making the connection between how a digital file or stream enters a facility to the point where it is re-encoded for various distribution streams. It is not uncommon to re-encode an incoming stream into 10 variants, all of which require a pre-distribution quality check.

Downstream, these 10 variants are multiplexed with specific metadata into a multi-programme transport stream en route to the next facility, typically a regional headend or local sub-headend, where streams are further manipulated to accommodate local ad insertions. When something went wrong in the past, it was typically related to an equipment issue; today, the root cause analysis can be tied to a combination of multiple performance issues. A heavy compression job from an encoding bank? IP packet loss due to high jitter? A high-bandwidth multiplexed stream combined with a low RF signal in the same pipe? A faulty transmitter module or power supply?

The real problem isn't any one single issue; it's the appearance and disappearance of all of these problems over a specific time period. Operators wind up chasing ghosts as problems come and go, and evidence is wiped away. The end result is a frustrated staff, dissatisfied advertisers and an agitated viewing audience.

The cloud is the most effective platform to help users profile what is happening across all these issues, and correlate thousands of data points with performance. Some pertinent examples include:

Bitrate levels across multiple streams, with data pinpointing when signals fluctuate with noticeable effect

Notations on when signals go through any kind of transformation, such as encoding, multiplexing or physical layer boundary

Intermittent signal drops due to overbuffering or packet loss in the IP domain
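As a rough illustration of the kind of correlation listed above, the following Python sketch flags bitrate dips per stream and pairs each dip with logged events (such as packet loss or a multiplexing step) that occurred close to it in time. The `Sample` and `Event` types, the 20% dip threshold and the two-second window are all assumptions made for the example, not features of any particular monitoring product.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Sample:
    t: float             # seconds since the start of the capture
    stream: str          # hypothetical stream identifier
    bitrate_kbps: float


@dataclass(frozen=True)
class Event:
    t: float             # seconds since the start of the capture
    kind: str            # e.g. "packet_loss", "encode", "mux"


def bitrate_dips(samples, drop_ratio=0.2):
    """Return samples whose bitrate is more than drop_ratio below their stream's mean."""
    by_stream = {}
    for s in samples:
        by_stream.setdefault(s.stream, []).append(s)
    dips = []
    for stream_samples in by_stream.values():
        avg = mean(x.bitrate_kbps for x in stream_samples)
        dips.extend(x for x in stream_samples
                    if x.bitrate_kbps < avg * (1 - drop_ratio))
    return sorted(dips, key=lambda x: x.t)


def correlate(dips, events, window_s=2.0):
    """Pair each dip with the events logged within window_s seconds of it."""
    return [(d, [e for e in events if abs(e.t - d.t) <= window_s])
            for d in dips]
```

A real deployment would draw these samples from probes at each transformation point; the sketch only shows how a shared timeline lets otherwise separate measurements be lined up against each other.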

Working in the cloud provides added power through performance trends over time. With detailed trending, operators can understand the true root cause and related effect, and make the necessary adjustments to encoder compression, multi-stream bitrates and more. For terrestrial operations, the ability to analyse multiple IP, ASI and other streams ahead of the transmitter concurrently with the RF and decoded audio/video streams coming out allows users to quickly identify and address the source of the problem, ensuring unprecedented accuracy across root cause analysis, impact valuation and corrective actions.
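One simple way to picture trending is a moving average over a periodically collected error count, which smooths out the come-and-go behaviour of intermittent faults. The sketch below is a minimal Python example under that assumption; the function names, the window size and the idea of comparing the first and last smoothed values are illustrative choices only.

```python
from collections import deque


def moving_average(values, window):
    """Return the mean of each run of `window` consecutive values."""
    buf = deque(maxlen=window)
    total = 0.0
    out = []
    for v in values:
        if len(buf) == window:
            total -= buf[0]      # the oldest value is about to be evicted
        buf.append(v)
        total += v
        if len(buf) == window:
            out.append(total / window)
    return out


def is_worsening(values, window=3, tolerance=0.0):
    """True if the smoothed series ends higher than it started by more than tolerance."""
    smoothed = moving_average(values, window)
    return len(smoothed) >= 2 and smoothed[-1] - smoothed[0] > tolerance
```

Fed with, say, hourly counts of transport stream errors from one encoder, a check like this separates a steady background level from a series that is genuinely climbing.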

In a multi-stream universe, it is no longer possible to myopically look at system components independently. It is a complete ecosystem akin to an orchestra: the task is to comprehend how the various instruments work together in sync to form a cohesive result.

End point analysis

Perhaps the most effective use of a cloud system is better correlating the millions of data points collected over the last mile. The closer we get to the end point, the better we can understand how long a signal issue endured, and how many viewers tuned out.
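The two questions above, how long an issue endured and how many viewers tuned out, can be sketched in Python as follows. The example assumes two hypothetical, equally spaced series collected near the end point: a per-interval impairment flag and a concurrent viewer count. Neither the data layout nor the function names come from a real system.

```python
def impairment_spans(flags):
    """Return (start, end) index pairs, end exclusive, for each run of True flags."""
    spans = []
    start = None
    for i, bad in enumerate(flags):
        if bad and start is None:
            start = i
        elif not bad and start is not None:
            spans.append((start, i))
            start = None
    if start is not None:
        spans.append((start, len(flags)))
    return spans


def viewer_loss(viewers, span):
    """Viewer count just before the span minus the lowest count during it."""
    start, end = span
    baseline = viewers[start - 1] if start > 0 else viewers[start]
    return baseline - min(viewers[start:end])


# Hypothetical one-minute samples: three impaired minutes and the audience over time.
flags = [False, False, True, True, True, False]
viewers = [1000, 1000, 950, 800, 780, 900]
for span in impairment_spans(flags):
    duration_min = span[1] - span[0]
    print(duration_min, viewer_loss(viewers, span))
```

The span length gives the duration of the issue in sampling intervals, and the difference against the last clean sample gives a crude estimate of the audience lost while it lasted.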

Using a software-defined cloud platform offers the ability to look across the path and the layers of a stream to collect clues necessary for root cause analysis and deterministic action. This not only delivers the operational efficiency and equipment savings of the cloud, but can help reduce transmitter downtime for broadcasters, protecting TV operations from fines and lost ad revenue.

The latter protections also apply to MVPDs, who can further save money by eliminating the costs of dispatching trucks across the region.

Today's issues are rooted in optimising interoperability, configurations and workflow, and focus far less on the equipment failures that an NMS (network management system) solution tracked for us 20-30 years ago.

The possibilities of cloud monitoring, particularly when deployed as MaaS (monitoring as a service), are endless. Cloud deployment also enables outsourcing the day-to-day monitoring and firefighting, as well as rapid access to subject matter and vendor equipment experts. This reduces the time-consuming process of evaluating and addressing signal quality issues that are becoming more and more intermittent. As MVPDs and over-the-air networks grow, working in the cloud makes scaling simple, especially if the vendor in question relies on commercial off-the-shelf equipment for most of the infrastructure.

Ultimately, the continued escalation of and reliance on big data will help users better understand how a performance issue affected viewers, providing a rich set of details to prevent similar issues in the future, and ensure audience and customer retention over the long term. The cloud will not only save money on deployment and in operations; it will also help MVPDs, broadcasters and TV network operators generate new revenue opportunities through these efficiencies with the import of ratings data, social media activity, subscriber data and advanced OTT and mobile services. With the cloud, there is no longer a reason to address these many tasks in bits and pieces.